Reaching the maximum possible precision is rarely required from a practical algorithm. In real-world data, modeling and measurement errors are usually several orders of magnitude larger than the errors that come from rounding floating-point numbers and such, and we are often perfectly happy with picking an approximate method that trades off precision for speed.

In this section, we introduce one of the most important building blocks in such approximate, numerical algorithms: Newton’s method.

#Newton’s Method

Newton’s method is a simple yet very powerful algorithm for finding approximate roots of real-valued functions, that is, the solutions to the following generic equation:

The only thing assumed about the function is that at least one root exists and that is continuous and differentiable on the search interval. There are also some boring corner cases, but they almost never occur in practice, so we will just informally say that the function is “good.”

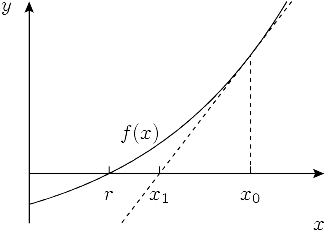

The main idea of the algorithm is to start with some initial approximation and then iteratively improve it by drawing the tangent to the graph of the function at and setting the next approximation equal to the -coordinate of its intersection with the -axis. The intuition is that if the function is “good” and is already close enough to the root, then will be even closer.

To obtain the point of intersection for , we need to equal its tangent line function to zero:

from which we deriveNewton’s method is very important: it is the basis of a wide range of optimization solvers in science and engineering.

#Square Root

As a simple example, let’s derive the algorithm for the problem of finding square roots:

If we substitute into the generic formula above, we can obtain the following update rule:In practice we also want to stop it as soon as it is close enough to the right answer, which we can simply check after each iteration:

const double EPS = 1e-9;

double sqrt(double n) {

double x = 1;

while (abs(x * x - n) > eps)

x = (x + n / x) / 2;

return x;

}

The algorithm converges for many functions, although it does so reliably and provably only for a certain subset of them (e.g., convex functions). Another question is how fast the convergence is, if it occurs.

#Rate of Convergence

Let’s run a few iterations of Newton’s method to find the square root of , starting with , and check how many digits it got correct after each iteration:

1.0000000000000000000000000000000000000000000000000000000000000 1.5000000000000000000000000000000000000000000000000000000000000 1.4166666666666666666666666666666666666666666666666666666666675 1.4142156862745098039215686274509803921568627450980392156862745 1.4142135623746899106262955788901349101165596221157440445849057 1.4142135623730950488016896235025302436149819257761974284982890 1.4142135623730950488016887242096980785696718753772340015610125 1.4142135623730950488016887242096980785696718753769480731766796

Looking carefully, we can see that the number of accurate digits approximately doubles on each iteration. This fantastic convergence rate is not a coincidence.

To analyze convergence rate quantitatively, we need to consider a small relative error on the -th iteration and determine how much smaller the error is on the next iteration:

We can express as . Plugging it into the Newton iteration formula and dividing both sides by we getHere we have Taylor-expanded at , using the assumption that the error is small (since the sequence converges, for sufficiently large ).

Rearranging for , we obtain

which means that the error roughly squares (and halves) on each iteration once we are close to the solution. Since the logarithm is roughly the number of accurate significant digits in the answer , squaring the relative error corresponds precisely to doubling the number of significant digits that we had observed.

This is known as quadratic convergence, and in fact, this is not limited to finding square roots. With detailed proof being left as an exercise to the reader, it can be shown that, in general

which results in at least quadratic convergence under a few additional assumptions, namely not being equal to and being continuous.